The Computational Cost of FHE Or Why Is FHE So Slow?

Now that we’ve established the mathematical foundations of FHE and explored the concept of homomorphism, it’s time to look ahead to what FHE deployments actually entail. While the promise of computing on encrypted data is immense, that power comes at a steep computational cost. Even with modern schemes and libraries, FHE operations are orders of magnitude slower and heavier than their plaintext equivalents. Below, we’ll explore why FHE is still so slow, what causes bottlenecks, and how researchers are working to improve efficiency.

Performance Bottlenecks in FHE Computation

FHE’s slowness isn’t due to a single cause – it’s a combination of factors inherent to how these encryption schemes work. Key performance bottlenecks include:

- Ciphertext expansion: In the most efficient FHE schemes, ciphertexts can be just 4–10× larger than plaintexts. However, in less optimized implementations, the expansion factor can exceed 100 000×, making even basic operations (e.g., addition or multiplication) highly strenuous on encrypted data.

- Noise Growth and Multiplicative Depth: Most FHE schemes are “noisy”, and beyond a certain limit, one must invoke a special refresh computation to reduce noise.

- Bootstrapping (Noise Reset) Overhead: Bootstrapping is the ingenious procedure that resets ciphertext noise and enables unlimited depth. However, it is a pricey “checkpoint”: it might take seconds (or more) of computation to refresh one ciphertext.

- Complex Operations & Data Types: FHE works naturally with additions and multiplications on encrypted integers or polynomials. But comparisons, divisions, or non-linear functions have to be approximated by polynomial operations. This further slows things down.

- Memory and Bandwidth Constraints: The large size of FHE ciphertexts means high memory usage and slow data movement. Even small computations can demand substantial resources, with memory usage reaching hundreds of gigabytes and runtimes extending to tens of seconds on powerful servers..

In summary, FHE imposes heavy computation costs due to operating on large, encrypted structures with accumulated noise. On a positive note, each of the above-mentioned bottlenecks can be significantly reduced through known optimizations and ongoing hardware developments.

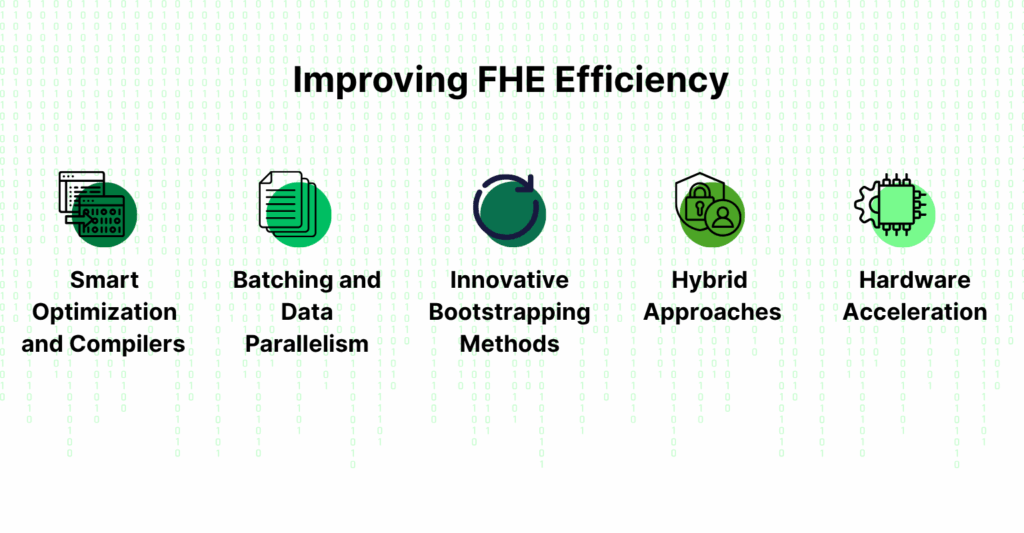

Efforts to improve efficiency

The good news is that FHE performance has been steadily improving, and a lot of research is focused on making it faster.

- Smarter Circuit Optimization and Compilers: One way to deal with FHE’s cost is to reduce the “work” it needs to do. Researchers have built tools that act like optimizing compilers for encrypted computation. While these don’t change the fundamental cost of each primitive operation, they can make a big difference by shortening the encrypted circuit or using smaller parameters whenever possible.

- Batching and Data Parallelism: Schemes like BFV, BGV, and CKKS support a feature called batching (or packing). This allows one ciphertext to encrypt not just a single value, but a whole vector of values (e.g. 100s or 1000s of numbers) and operate on them simultaneously. This massively improves throughput. The computational cost per ciphertext is roughly the same, but you get a lot more work done per operation.

- Better Bootstrapping Methods: Bootstrapping remains FHE’s most computationally expensive operation, but it has improved dramatically over the past decade. Early methods took many minutes per operation, while today’s state-of-the-art techniques complete bootstrapping in a fraction of that time. As bootstrapping speeds continue to improve, fully unbounded FHE is becoming increasingly viable for specific practical tasks.

- Hybrid Approaches: In practice, one doesn’t always have to use pure FHE for an entire application. Researchers and engineers often combine FHE with other privacy tech to get a better trade-off.

- Hardware Acceleration: Because FHE computations consist of lots of mathematical operations on large numbers (but embarrassingly parallel as noted), they are ripe for hardware acceleration. There’s active work on using GPUs, FPGAs, and ASICs to speed up core FHE routines. Early results are promising – specialized hardware can often run FHE tasks several times faster than a general-purpose CPU

Closing Thoughts

Admittedly, Fully Homomorphic Encryption is slow today. The costs in runtime and memory are the primary reason why FHE isn’t yet ubiquitous in everyday applications, despite its promise. Fundamentals behind how FHE works – large ciphertexts, noise management, and mathematical operations – burden us with significant computational weight.

However, the gap between FHE and unencrypted processing, while still huge, is closing bit by bit. What took hours a few years ago might take minutes now, and perhaps seconds in the near future. As optimizations continue – be it through better algorithms, hybrid solutions, or custom ASICs – the dream of using FHE seamlessly in real-world systems comes closer to reality.

FHE’s journey is a reminder that privacy isn’t free – but with enough ingenuity, we can strive to make it affordable. In future posts, we’ll continue exploring this journey. Next up we are going to compare four different FHE schemes: BGV, BFV, CKKS, and TFHE and you will see each of these has different approaches and tradeoffs which will steer attempts to optimize today’s highlighted issue – FHE performance.