Homomorphism is our next step in understanding FHE. If you’re just starting to learn about Homomorphic Encryption, you might be daunted by the amount of abstract math. You should be! A lot of great minds put a lot of effort into making these schemes both secure and performant. In this post, we would like to introduce the concept of homomorphisms in simplified terms. If you can get to the end of the post, you should be able to have a good understanding of what homomorphisms allow, what they don’t, and how we use them. This Blog was inspired and written by Noam Kleinburd, Director of Cryptography in Chain Reaction.

Homomorphism – Basic Definitions

We’re going to assume that you, the reader, have some background in set theory, group theory, and know the basics of modular arithmetic.

Given two groups ![]() ,

,

a homomorphism is a function h from S1 to S2 that preserves the following property: ![]() .

.

If you’re already feeling lost, don’t worry! Let’s go over this again with a concrete example:

First, we’ll use integers as our sets: ![]() .

.

For our group operation, we’ll use addition: ![]()

Finally, our homomorphism is a simple multiplication by two:

![]()

It’s easy to see that ![]() .

.

Multiplication, however, isn’t preserved: ![]() .

.

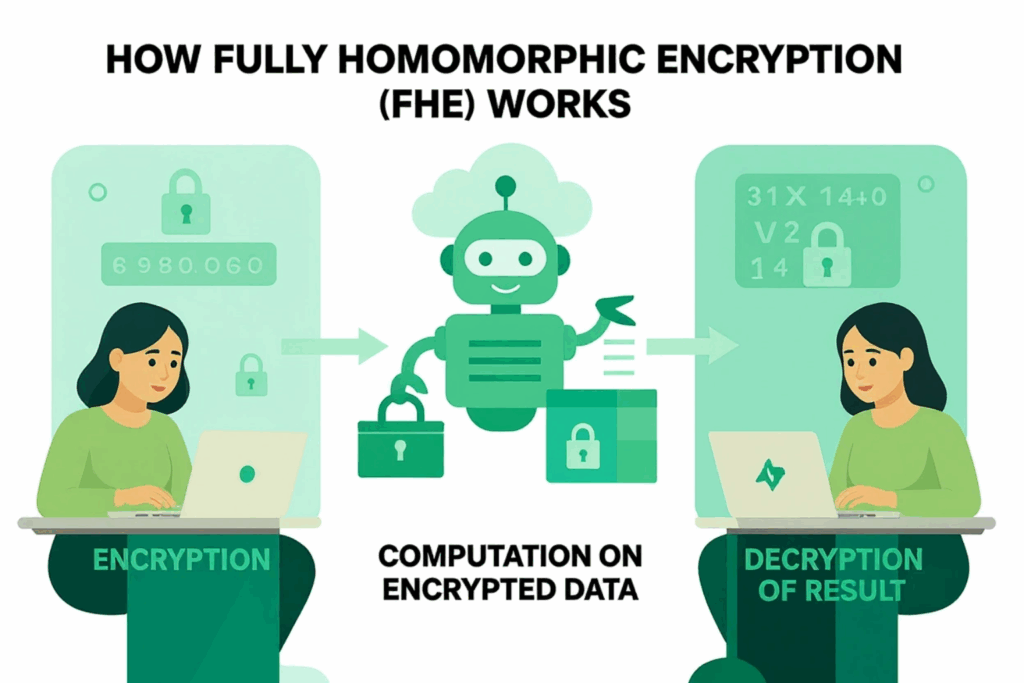

Some cryptographic schemes let you perform one operation; they’re called partially homomorphic. If the scheme can perform both addition and multiplication, it’s called fully homomorphic.

In the next sections we’ll look at some partially homomorphic schemes.

RSA

RSA is a well-known cryptosystem publicized by Rivest, Shamir, and Adleman in 1977. It is asymmetric, which means one party generates a private key and public key. The public key is generated by picking two large primes and multiplying them:

![]() The public key also contains a number e, which is used to encrypt messages: pk = (N, e). The construction of the private key is slightly more complex. We use the multiplication of p-1 and q-1:

The public key also contains a number e, which is used to encrypt messages: pk = (N, e). The construction of the private key is slightly more complex. We use the multiplication of p-1 and q-1: ![]()

and calculate ![]()

This lets us define encryption and decryption:

![]()

We won’t go into the details of why decryption is the inverse of encryption, or security aspects of this scheme.

Note: This is a description of “textbook” RSA. It is not secure in all cases, and you definitely shouldn’t implement it yourself. Use well-established libraries for cryptography whenever possible.

Notice that the sets we’re using are numbers modulo N, often denoted: ![]()

Let’s see what happens when we multiply two ciphertexts: ![]()

Success! We can use RSA to multiply encrypted numbers. However, it’s important to remember that this multiplication is modulo N. If your original numbers are large an overflow may occur, and we can’t add numbers or divide with flooring.

Paillier

This next example is a little more complex and has some very interesting properties. To keep things simple, we’ll modify the scheme to be less secure, so don’t implement this yourself either.

The public key is generated in a similar way as RSA: pick large primes p and q (of equal length) and multiply them.

![]() .

.

The private key is calculated as:

To encrypt a message m, we should pick a random number 0 < r < N , but we’ll use the random number 1 (chosen by fair dice roll). Then compute the ciphertext as

![]() .

.

The decryption formula is long and scary, so we’ll leave it out of this post,

(for those of you who are feeling brave: ![]() ).

).

Let’s use the encryption function and compute on some ciphertexts:

This is something new. We multiplied our ciphertexts, and it resulted in adding our messages.

As an exercise, try adding the random number r to the encryption, and see that the homomorphic property still applies.

Conclusion

These are real cryptosystems that are used in our day-to-day life. Each of them is partially homomorphic, which allows some computations to be made, but neither of them allows both addition and multiplication of messages.